The EU AI Act - Requirements

Introduction

What is the EU AI Act?

The EU Artificial Intelligence Act (Regulation (EU) 2024/1689, “AI Act”) is the first comprehensive legal framework for AI systems.

Organisations developing, deploying, importing, or distributing AI systems in the European Union must understand their obligations under this regulation, which entered into force on 1 August 2024 and applies in stages.

Key Takeaways

Broad scope: The AI Act applies to providers, deployers, importers, and distributors of AI systems placed on the EU market or whose output is used in the EU

Risk-based approach: Obligations scale according to the AI system’s risk level and the organisation’s role

Phased implementation: Prohibitions and AI literacy obligations have applied since February 2025 and general-purpose AI model obligations since August 2025; most high-risk and transparency obligations apply from August 2026

Substantial penalties: Fines of up to €35 million or 7% of global annual turnover, whichever is higher, for the most serious infringements

This section gives a high-level overview of the EU AI Act and its key obligations. It explains who the EU AI Act applies to, when the key obligations apply and what the consequences of non-compliance are.

Table of contents

Scope and Applicability

Roles and Responsibilities

AI System Risk Classification

General-Purpose AI Models (GPAI)

Consequences of Non-Compliance

Additional Resources

EU AI Act Requirements

1 Scope and Applicability

The EU Artificial Intelligence Act (Regulation 2024/1689, “EU AI Act”) establishes a comprehensive legal framework for artificial intelligence systems (AI systems). Organisations developing, deploying, importing, or distributing AI systems in the European Union must understand their obligations under this regulation.

The EU AI Act has a broad territorial scope, which means that it can apply to organisations outside the EU. The EU AI Act applies to:

providers placing AI systems on the EU market or putting them into service in the EU, regardless of where they are established

deployers located in the EU

providers and deployers located in third countries, where the output produced by the AI system is used in the EU

importers and distributors of AI systems in relation to the EU market.
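As a rough illustration, the four scope bullets above can be expressed as a screening function. This is a minimal sketch under simplifying assumptions: the `Role` enum, the function name `in_territorial_scope` and its yes/no parameters are hypothetical, it ignores the exemptions and the other actors covered by Article 2, and it is no substitute for legal analysis.

```python
from enum import Enum


class Role(Enum):
    PROVIDER = "provider"
    DEPLOYER = "deployer"
    IMPORTER = "importer"
    DISTRIBUTOR = "distributor"


def in_territorial_scope(role: Role, established_in_eu: bool,
                         placed_on_eu_market: bool, output_used_in_eu: bool) -> bool:
    """Rough screening against the four scope bullets; not legal advice."""
    if role is Role.PROVIDER:
        # Providers placing systems on the EU market (wherever established),
        # or third-country providers whose system output is used in the EU.
        return placed_on_eu_market or output_used_in_eu
    if role is Role.DEPLOYER:
        # Deployers located in the EU, or third-country deployers whose output is used in the EU.
        return established_in_eu or output_used_in_eu
    # Importers and distributors are covered in relation to the EU market.
    return placed_on_eu_market
```

For example, `in_territorial_scope(Role.PROVIDER, established_in_eu=False, placed_on_eu_market=False, output_used_in_eu=True)` returns `True`, reflecting the third-country rule.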

There are certain limited exemptions. The AI Act does not apply to:

AI systems used exclusively for military, defence or national security purposes

AI systems used solely for scientific research and development

AI systems used for personal, non-professional purposes

AI systems based on

The AI Act is implemented in phases, which means that obligations start to apply on different dates. Some key provisions, such as the ban on prohibited AI practices, already apply. The next key application date is 2 August 2026, when obligations for high-risk AI systems listed in Annex III become applicable; obligations for high-risk AI systems covered by Annex I follow on 2 August 2027.
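The phased timeline can be kept as a small lookup table. The sketch below assumes the application dates set out in Article 113 of Regulation (EU) 2024/1689; the milestone labels are our own summaries, and the helper names `applicable_on` and `next_milestone` are hypothetical.

```python
from datetime import date
from typing import Optional

# Application dates per Article 113 of Regulation (EU) 2024/1689; labels are summaries.
MILESTONES = [
    (date(2025, 2, 2), "Prohibited AI practices and AI literacy"),
    (date(2025, 8, 2), "General-purpose AI model obligations, governance and most penalties"),
    (date(2026, 8, 2), "Most remaining obligations, incl. Annex III high-risk and transparency"),
    (date(2027, 8, 2), "High-risk AI systems under Annex I (Article 6(1))"),
]


def applicable_on(day: date) -> list[str]:
    """Return the labels of all milestones whose application date is on or before `day`."""
    return [label for start, label in MILESTONES if start <= day]


def next_milestone(day: date) -> Optional[tuple[date, str]]:
    """Return the first milestone strictly after `day`, or None once everything applies."""
    upcoming = [(start, label) for start, label in MILESTONES if start > day]
    return upcoming[0] if upcoming else None
```

For example, `applicable_on(date(2025, 9, 1))` returns the first two labels, and `next_milestone` for the same day points to 2 August 2026.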

2 Roles and Responsibilities

The EU AI Act establishes different roles that determine the extent of obligations. The entities covered include legal and natural persons, public authorities, agencies, and other bodies.

Provider

Definition: A natural or legal person, public authority, agency or other body that develops an AI system or a general-purpose AI model or that has an AI system or a general-purpose AI model developed and places it on the market or puts the AI system into service under its own name or trademark, whether for payment or free of charge

Key Obligations:

Ensure the AI system complies with AI Act requirements before placing it on the market or putting it into service

For high-risk AI systems: register the AI system in the EU database, establish post-market monitoring and report serious incidents, among other obligations

Practical examples:

A US company develops an AI system and makes it available for EU customers

A healthcare organisation has an AI system developed with an ICT partner to assess patient fall risk and makes it available nationally, free of charge

Rule of thumb: If you develop or have an AI system developed for you, you are likely a provider.

Important note: Putting your name or trademark on a high-risk AI system, making a substantial modification to it, or changing its intended purpose so that it becomes high-risk may create a "provider presumption" (Article 25), meaning you will be considered a provider even if you did not originally develop the system.

Deployer

Definition: A natural or legal person, public authority, agency or other body using an AI system under its authority except where the AI system is used in the course of a personal non-professional activity

Key Obligations:

Ensure AI literacy (e.g. training and instructions for staff)

High-risk AI systems: use the system according to the instructions for use, conduct a fundamental rights impact assessment (FRIA) where required (e.g. public bodies and private entities providing public services), monitor system operation, ensure human oversight and report serious incidents

Ensure transparency (e.g. inform people of AI use, where applicable)

Practical examples:

A hospital using diagnostic AI to analyse medical images

A public authority using AI or offering AI tools to enhance employee efficiency

A company using AI recruitment tools to screen job applications (note: high-risk AI system)

Rule of thumb: If you use an AI system in your professional capacity, you are a deployer.

Importer

Definition: A natural or legal person located or established in the Union that places on the market an AI system that bears the name or trademark of a natural or legal person established in a third country

Key Obligations: For high-risk AI systems, the importer must verify that the provider has carried out the conformity assessment, drawn up the technical documentation, affixed the CE marking and appointed an authorised representative. The importer must also indicate its own name and contact details on the system or its accompanying documentation and cooperate with the authorities.

Practical example: An EU-based company imports AI software developed by a US company and distributes it in Europe under the US company's brand.

Distributor

Definition: a natural or legal person in the supply chain, other than the provider or the importer, that makes an AI system available on the EU market

Key Obligations: Ensuring the system is accompanied by the required documentation, reporting non-compliance, and verifying conformity and CE marking for high-risk AI systems

Practical example: A software reseller that makes AI systems available to customers without modifying them.

Authorised Representative

Definition: A natural or legal person located or established in the EU who has received and accepted a written mandate from a provider of an AI system or a general-purpose AI model to, respectively, perform and carry out on its behalf the obligations and procedures established by the EU AI Act

Key obligations: acting on behalf of the provider, verifying technical documentation (and conformity), cooperating with the authorities.

Practical examples:

A pharmaceuticals company based in the US appoints an authorised representative in the EU for an AI system that evaluates cancer growth from CT scans.

An AI software company based in Singapore develops a general-purpose AI model and plans to make it available on the EU market; it must appoint an authorised representative.
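The rules of thumb in this section can be combined into a first-pass role screening. In the sketch below the `Activity` flags and the `likely_roles` function are hypothetical simplifications of the definitions above; one organisation can hold several roles for the same system, and authorised representatives are not modelled.

```python
from dataclasses import dataclass


@dataclass
class Activity:
    """Hypothetical yes/no facts about how one organisation handles one AI system."""
    develops_or_commissions: bool = False  # develops the system or has it developed
    rebrands_or_modifies: bool = False     # own trademark on, or substantial modification of, a high-risk system
    uses_professionally: bool = False      # uses it under its authority, not for personal use
    eu_established: bool = False
    places_on_eu_market: bool = False      # first makes it available on the EU market
    third_country_brand: bool = False      # bears the name or trademark of a non-EU provider
    resells: bool = False                  # makes it available further down the supply chain


def likely_roles(a: Activity) -> set[str]:
    """Return the roles the rules of thumb point to; a starting point, not a legal opinion."""
    roles = set()
    if a.develops_or_commissions or a.rebrands_or_modifies:
        roles.add("provider")
    if a.uses_professionally:
        roles.add("deployer")
    if (a.eu_established and a.places_on_eu_market
            and a.third_country_brand and "provider" not in roles):
        roles.add("importer")
    if a.resells and not roles & {"provider", "importer"}:
        roles.add("distributor")
    return roles
```

For instance, an EU company importing US-branded AI software would be screened as `likely_roles(Activity(eu_established=True, places_on_eu_market=True, third_country_brand=True))`, which returns `{"importer"}`.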

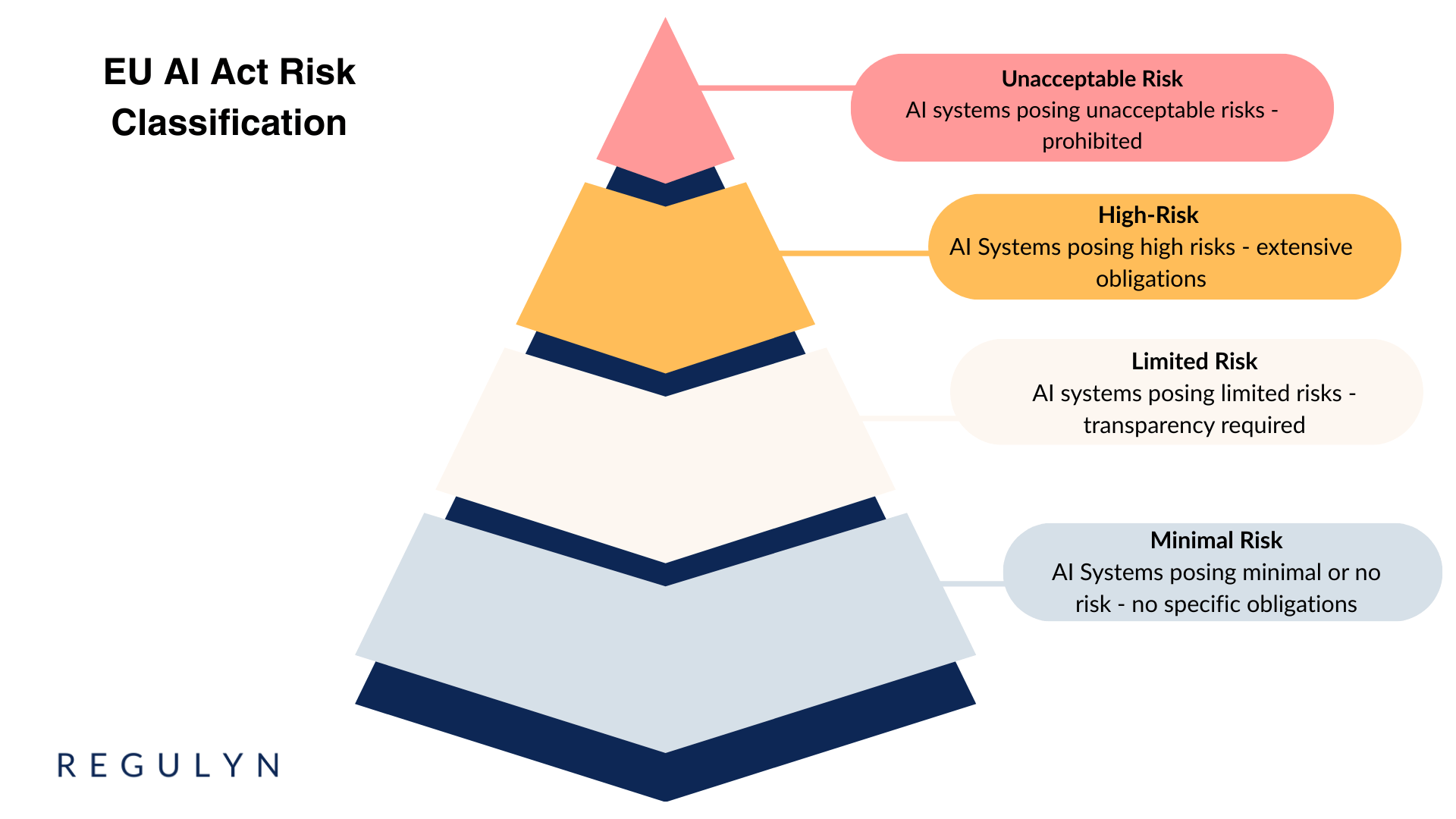

3 AI System Risk Classification

The EU AI Act follows a risk-based approach and establishes four risk categories for AI systems with corresponding obligations. Companies, public organisations and other natural or legal persons must comply with obligations that vary with the risk level of the AI system.

Prohibited AI - Unacceptable Risk

Prohibited AI systems, which pose unacceptable risk, must not be placed on the market, put into service or used within the EU. Prohibited practices (i.e. prohibited AI systems) include:

Subliminal manipulation

Exploitation of vulnerabilities (e.g. age, disability, socio-economic situation)

Social scoring by public or private actors

Real-time remote biometric identification in publicly accessible spaces for law enforcement (narrow exceptions exist)

Biometric categorisation to infer sensitive attributes (e.g. race, political opinions, religious beliefs, sexual orientation)

Emotion recognition in the workplace and in education institutions (exceptions for medical or safety reasons)

Untargeted scraping of facial images from the internet or CCTV footage to build facial recognition databases

Practical examples:

A company cannot develop or deploy (use) emotion recognition to assess employee motivation, engagement or dissatisfaction.

A tech company cannot place AI-powered facial image scraping software on the EU market.

Important note: The ban can apply even if an AI feature is active but not intended to be used for a prohibited purpose. For example, an AI feature may not be intended to evaluate employees’ emotions, yet in fact recognise them (e.g. sentiment analysis of speech or automated analysis in video conferencing apps).

Key obligations:

Must not be placed on the market, put into service or used in the EU (obligation applicable from 2 February 2025)

High Risk AI System

High-risk AI systems are subject to extensive obligations under the EU AI Act. There are two pathways for classifying an AI system as high-risk.

Safety Components as High-Risk AI

AI systems intended to be used as safety components of products covered by the EU harmonisation legislation listed in Annex I, or that are themselves such products, are high-risk AI systems where the product must undergo a third-party conformity assessment under that legislation

Practical examples: AI in medical devices, AI in vehicles, AI in toys

High-Risk AI systems defined in Annex III

AI systems used in specified high-risk areas are considered high-risk AI. High-Risk areas and use cases include:

Biometric identification

Education and vocational training

AI determining access or admission to education

AI assessing students

Employment and recruitment

AI in recruitment, e.g. screening applications

AI for evaluating employee performance

Access to private or public services

AI used for creditworthiness assessment

AI assessing eligibility to public services

Law enforcement

AI for assessing re-offending risk

Migration and border control

AI for examining visa applications or detecting falsified documents

Administration of justice

AI assisting judicial authorities in researching and interpreting facts and the law and in applying the law to the facts

Practical examples:

A public organisation uses AI to screen documents to determine if applicants qualify for social benefits

A company develops AI-assisted software to evaluate the severity of patients’ depression or anxiety (medical device software). The software is also a high-risk AI system.

Key obligations for High-Risk Systems:

Risk management system (Article 9)

Data governance and quality requirements (Article 10)

Technical documentation (Article 11 and Annex IV)

Automatic logging (Article 12)

Transparency and information to deployers (Article 13)

Human oversight (Article 14)

Accuracy, robustness, and cybersecurity (Article 15)

Quality management system (Article 17)

Conformity assessment (Article 43)

CE marking (Article 48)

Transparency (Article 50), where applicable

EU database registration (Articles 49 and 71)

Post-market monitoring (Article 72)

Serious incident reporting (Article 73)
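Providers often track these obligations as a checklist. The sketch below is a hypothetical tracker (the names `HIGH_RISK_PROVIDER_CHECKLIST` and `open_items` are ours); article numbers follow Regulation (EU) 2024/1689, and the Article 50 transparency duties are left out because they apply only where relevant.

```python
# Hypothetical tracker: high-risk provider obligations mapped to articles of Regulation (EU) 2024/1689.
HIGH_RISK_PROVIDER_CHECKLIST = {
    "Risk management system": "Article 9",
    "Data governance and quality": "Article 10",
    "Technical documentation": "Article 11 and Annex IV",
    "Automatic logging": "Article 12",
    "Information to deployers": "Article 13",
    "Human oversight": "Article 14",
    "Accuracy, robustness and cybersecurity": "Article 15",
    "Quality management system": "Article 17",
    "Conformity assessment": "Article 43",
    "CE marking": "Article 48",
    "EU database registration": "Article 49",
    "Post-market monitoring": "Article 72",
    "Serious incident reporting": "Article 73",
}


def open_items(done: set[str]) -> list[str]:
    """List obligations not yet marked as done, with their legal reference, in checklist order."""
    return [
        f"{item} ({article})"
        for item, article in HIGH_RISK_PROVIDER_CHECKLIST.items()
        if item not in done
    ]
```

For example, `open_items({"Risk management system"})` returns the remaining twelve entries, starting with `"Data governance and quality (Article 10)"`.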

Important notes:

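An Annex III AI system is not considered high-risk if it does not pose a significant risk of harm and does not materially influence the outcome of decision-making, for example where it only performs a narrow procedural task, improves the result of a previously completed human activity, detects decision-making patterns without replacing or influencing the human assessment, or performs a preparatory task (Article 6(3), the de minimis exception). This exception does not apply if the AI system performs profiling of natural persons.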

Many of the extensive obligations are placed on the provider of the high-risk AI system.

Limited-Risk AI System

AI systems posing limited risk are mainly subject to transparency obligations (Article 50). The limited-risk category is not explicitly defined in the EU AI Act.

Limited risk AI could include:

AI interacting with natural persons

AI generating content (synthetic audio, image, video or text content)

Key obligations:

labelling AI-generated content as such (in a machine-readable format)

informing users that they are interacting with AI, unless this is obvious

informing people that they are subject to emotion recognition or biometric categorisation

disclosing that content has been artificially generated or manipulated (deepfakes, and AI-generated text published to inform the public; limited exceptions apply)

Important notes:

Transparency obligations apply to providers (AI systems interacting with natural persons, generating content) and deployers (emotion recognition, biometric categorization, deepfakes)

Transparency obligations may also arise from other EU and national legislation (e.g. GDPR)

Practical examples:

a company launches an AI chatbot to reply to generic customer requests

a public organization implements an AI chatbot to assist citizens in navigating their website
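For a chatbot like the ones above, the disclosure and marking duties can be built into the reply path. This is a minimal sketch under stated assumptions: `ChatSession`, the disclosure wording and the `ai_generated` metadata field are hypothetical, and the AI Act does not prescribe a particular message or metadata schema.

```python
from dataclasses import dataclass

# Hypothetical disclosure text; the AI Act does not prescribe exact wording.
AI_DISCLOSURE = "You are chatting with an AI assistant, not a human."


@dataclass
class ChatSession:
    disclosed: bool = False  # whether the user has already been told they are talking to AI

    def reply(self, generated_text: str) -> dict:
        """Wrap model output: disclose AI use once per session and flag the output as AI-generated."""
        text = generated_text
        if not self.disclosed:
            text = f"{AI_DISCLOSURE}\n\n{generated_text}"
            self.disclosed = True
        # Hypothetical machine-readable marker travelling with the human-readable text.
        return {"text": text, "metadata": {"ai_generated": True}}
```

Disclosing once at the start of a session is one design choice; a persistent label in the user interface is another.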

Minimal-Risk AI System

The EU AI Act does not impose specific requirements on AI systems posing minimal risk. The minimal-risk category is not explicitly defined. Minimal-risk AI systems may include:

spam filters

AI-enhanced video editing

Key obligations:

No specific AI Act obligations beyond general ones such as AI literacy (Article 4); providers may voluntarily adopt codes of conduct

Important notes:

Even where there are no AI Act-specific requirements, other regulations (e.g. GDPR) may still apply
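The classification logic of this section can be summarised as an ordered triage. The sketch below is a simplification under stated assumptions: `RiskTier` and `triage` are hypothetical names, each input is a yes/no answer from a prior legal assessment, the profiling override reflects the last subparagraph of Article 6(3), and a real system can carry obligations from more than one tier (a high-risk chatbot also has transparency duties), whereas the function returns only the most severe tier.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "prohibited"
    HIGH = "high risk"
    LIMITED = "limited risk"
    MINIMAL = "minimal risk"


def triage(*, prohibited_practice: bool, annex_i_safety_component: bool,
           annex_iii_use_case: bool, de_minimis_applies: bool,
           performs_profiling: bool, transparency_trigger: bool) -> RiskTier:
    """Return the most severe tier suggested by the answers, checked in order of severity."""
    if prohibited_practice:
        return RiskTier.UNACCEPTABLE
    if annex_i_safety_component:  # Annex I product requiring third-party conformity assessment
        return RiskTier.HIGH
    # Annex III use cases are high-risk unless the exception applies; profiling always stays high-risk.
    if annex_iii_use_case and (performs_profiling or not de_minimis_applies):
        return RiskTier.HIGH
    if transparency_trigger:  # interacts with people or generates synthetic content
        return RiskTier.LIMITED
    return RiskTier.MINIMAL
```

Because the checks run from most to least severe, a recruitment screening tool answered with `annex_iii_use_case=True` and no exception is classified as `RiskTier.HIGH` even if it also triggers transparency duties.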

4 General-Purpose AI Models (GPAI)

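A general-purpose AI model is an AI model that displays significant generality, is capable of competently performing a wide range of distinct tasks and can be integrated into a variety of downstream systems or applications. An AI system based on such a model, which can serve a variety of purposes both for direct use and for integration in other AI systems, is a general-purpose AI system.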

The EU AI Act imposes additional obligations on providers of GPAI models. The key obligations are listed below and will be discussed in more detail on a dedicated guidance page.

Key obligations:

drawing up technical documentation of the model, including its training and testing process

drawing up and making available information and documentation for downstream providers

putting in place a copyright policy

publishing a sufficiently detailed summary of the content used for training

for GPAI models with systemic risk: model evaluation, adversarial testing, tracking and reporting serious incidents, and cybersecurity measures

Important notes:

A deployer or provider of a high-risk or limited-risk AI system should carefully consider whether they may become a provider of a GPAI model. This could be the case if a GPAI model is used to develop the system and the new system is placed on the market under the provider’s name (e.g. a system based on a ChatGPT model with significant modifications).

5 Consequences of Non-Compliance

The EU AI Act introduces one of the strictest enforcement regimes in EU law. Penalties are designed to ensure that organisations take AI governance seriously and can demonstrate control over their systems.

Non-compliance with the EU AI Act can lead to administrative fines up to 35 million euros or 7 % of global annual turnover. The amount of the administrative fine varies based on the seriousness of the offence:

For non-compliance with the prohibitions on AI practices: up to €35 million or 7 % of total worldwide annual turnover, whichever is higher

For non-compliance with the obligations of providers, deployers, importers, distributors and authorised representatives, including transparency obligations: up to €15 million or 3 % of total worldwide annual turnover, whichever is higher

For supplying incorrect, incomplete or misleading information to authorities: up to €7.5 million or 1 % of total worldwide annual turnover, whichever is higher

For SMEs and start-ups, each of these fines is capped at whichever of the two amounts is lower. Additionally, the Commission can fine providers of GPAI models for intentional or negligent non-compliance with their obligations (up to €15 million or 3 % of global annual turnover, whichever is higher).

In addition to administrative fines, the authorities have enforcement and monitoring powers. The authorities may, for example, impose operational restrictions such as withdrawal from the market, revocation of the CE marking or suspension of the AI system.

Practical examples:

A company uses a videoconference app with an automated “engagement analysis” feature. This can qualify as emotion recognition in the workplace and could result in a fine of up to €35 million or 7 % of global annual turnover.

A private healthcare company deploys an AI system for analysing CT scans without ensuring proper human oversight. This violates the deployer’s obligations for a high-risk AI system and could lead to a fine of up to €15 million or 3 % of global annual turnover.

A public organisation deploys a high-risk AI system (e.g. for recruitment or healthcare) without conducting a fundamental rights impact assessment (FRIA). This violates the deployer’s obligations for a high-risk AI system and can lead to a fine of up to €15 million or 3 % of global annual turnover.

A company develops an AI chatbot without machine-readable marking of its output or features that inform users they are interacting with AI. This violates the transparency requirements for limited-risk AI and could lead to a fine of up to €15 million or 3 % of global annual turnover.

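A common assumption is that the percentage cap always applies, but in many cases it does not. For organisations other than SMEs and start-ups, the higher of the two amounts applies: if the percentage of turnover is lower than the fixed amount, the fixed cap sets the maximum fine. In practice, any such organisation with an annual turnover of up to €500 million (7 % of €500 million is €35 million) faces the same maximum fine of €35 million for prohibited practices. SMEs and start-ups are instead subject to the lower of the two amounts.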
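The calculation behind these examples can be written out in a few lines. This sketch assumes the three fine tiers described above, the "whichever is higher" rule for most organisations and the "whichever is lower" rule for SMEs and start-ups; the names `FINE_TIERS` and `max_fine` are hypothetical, the separate GPAI fines are not modelled, and the result is only the statutory maximum, not the fine an authority would actually set.

```python
# Hypothetical table: tier -> (fixed cap in euros, percentage of total worldwide annual turnover).
FINE_TIERS = {
    "prohibited_practice": (35_000_000, 7),
    "other_obligations": (15_000_000, 3),
    "incorrect_information": (7_500_000, 1),
}


def max_fine(tier: str, worldwide_turnover_eur: int, is_sme: bool) -> float:
    """Statutory maximum: the higher of the two caps, or the lower one for SMEs and start-ups."""
    fixed_cap, percent = FINE_TIERS[tier]
    turnover_cap = worldwide_turnover_eur * percent / 100  # percentage share of turnover
    return min(fixed_cap, turnover_cap) if is_sme else max(fixed_cap, turnover_cap)
```

For example, `max_fine("prohibited_practice", 100_000_000, is_sme=False)` gives 35 million because the fixed cap exceeds 7 % of turnover (7 million), while the same call with `is_sme=True` gives 7 million.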

6 Additional Resources

This page provides a high-level overview of the key requirements under the EU AI Act. Its purpose is to help organisations understand the main obligations, identify their role under the regulation and recognise when more detailed analysis is needed.

We are currently preparing more in-depth guidance on specific topics, including high-risk AI documentation, sector-specific impacts, AI governance steps and research exemption. These resources will be published gradually on our Knowledge Centre to support organisations as the regulation enters into full application.

For those who want to explore the regulation and guidelines more, the following official sources are useful starting points:

EU AI Act (final text, EUR-Lex)

Guidelines on AI system definition (Publication of the Commission)

Guidelines on prohibited AI practices (Publication of the Commission)

FAQ (AI Act Service Desk)

Our aim is to provide clear, practical and trustworthy information. If you need tailored support in interpreting the AI Act requirements for your organisation, please contact us directly.

Frequently asked questions (FAQ)

-

The EU Artificial Intelligence Act is a new regulation that applies to AI systems placed on the EU market or used within the EU. It is often referred to as the “AI Act”.

The AI Act introduces a wide range of obligations. The scope of these requirements depends on the organisation’s role (such as provider, deployer or distributor) and the system’s risk level. Non-compliance can result in administrative fines of up to €35 million or 7 % of global annual turnover, whichever is higher.

-

The EU AI Act requires organisations to maintain documentation proving the safety, transparency and compliance of their AI systems.

In practice, at minimum, this means:

AI system directory with system name, risk level and other key details;

documentation for safety/governance processes

transparency documentation

AI training material or log of trainings held (AI literacy)

an AI appendix for agreements, where relevant.
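An AI system directory can start as a simple structured record per system. The sketch below is one possible shape, assuming Python dataclasses; apart from the system name and risk level mentioned above, the fields (`role`, `owner`, `purpose`, `documents`) and the helper `missing_fields` are hypothetical choices, not requirements of the AI Act.

```python
from dataclasses import dataclass, field
from enum import Enum


class RiskLevel(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


@dataclass
class AISystemRecord:
    """One entry in a hypothetical AI system directory."""
    name: str
    risk_level: RiskLevel
    role: str = ""     # e.g. "provider" or "deployer"
    owner: str = ""    # person or team responsible for the system
    purpose: str = ""  # intended purpose in plain language
    documents: list[str] = field(default_factory=list)  # links to governance, transparency and training records


def missing_fields(record: AISystemRecord) -> list[str]:
    """Return the text fields that are still empty, so gaps can be followed up."""
    required = ("name", "role", "owner", "purpose")
    return [name for name in required if not getattr(record, name).strip()]
```

A record such as `AISystemRecord(name="CV screening tool", risk_level=RiskLevel.HIGH, role="deployer", owner="HR")` would be flagged as missing its `purpose`.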

For high-risk AI, the documentation requirements are extensive, especially for providers. Key documentation includes technical documentation and risk management files and, for certain deployers, a FRIA. Additionally, a conformity assessment and a post-market monitoring plan may be required.

For general-purpose AI models (GPAI models) there are additional requirements. These include documentation covering models, training data, testing, risk mitigation, user guidance and monitoring.

More comprehensive guidance on documentation is available by requesting advisory support or visiting Regulyn Knowledge Center.

-

The EU AI Act classifies AI systems into four risk categories.

Unacceptable Risk

AI systems that threaten fundamental rights are prohibited and cannot be developed or used in the EU.

Examples: AI used for social scoring or exploiting vulnerabilities of specific groups.

High Risk

High-risk AI systems must meet strict requirements, including security, governance, transparency and documentation measures. Many AI use cases in healthcare, education, critical infrastructure and human resources fall into this category.

Examples: AI used for cancer detection, or systems used to assess job applicants.

Limited Risk

Limited-risk AI systems are subject to transparency obligations. Users must be informed when they are interacting with an AI system.

Example: AI-assisted customer service chatbots

(Note: the public sector faces additional restrictions.)

Minimal Risk

Minimal-risk AI systems are not subject to specific obligations under the AI Act.

Example: basic spam filtering.

-

Now.

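Some obligations, such as the obligation to ensure adequate AI literacy and the prohibitions on certain AI use cases, have applied since 2 February 2025, and obligations for general-purpose AI models since 2 August 2025.

Most provisions governing high-risk AI systems apply next, from 2 August 2026, with obligations for high-risk AI in products covered by Annex I following on 2 August 2027.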

-

Everything starts with a clear overview of how AI is currently used in your organisation. Begin by mapping your existing AI use cases. This allows you to prioritise systems based on their risk level.

A practical way to get started is to build on processes you already have in place, such as data protection risk assessments. It is also important to appoint a responsible person or team for AI governance.

In short: map your AI use, prioritise by risk and establish a governance process.
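Once use cases are mapped, prioritising them by risk is a simple sort. In this sketch the `UseCase` type, the `prioritise` function and the risk labels are hypothetical, and placing not-yet-classified systems directly after high-risk ones is a design choice (unknowns get reviewed early), not an AI Act rule.

```python
from typing import NamedTuple

# Lower number = review first. "unclassified" sits just after "high" by design choice.
RISK_PRIORITY = {"unacceptable": 0, "high": 1, "unclassified": 2, "limited": 3, "minimal": 4}


class UseCase(NamedTuple):
    name: str
    risk: str  # one of the RISK_PRIORITY keys


def prioritise(use_cases: list[UseCase]) -> list[UseCase]:
    """Order use cases by risk; sorted() is stable, so ties keep their mapped order."""
    return sorted(use_cases, key=lambda uc: RISK_PRIORITY[uc.risk])
```

For example, a spam filter, a CV screening tool, a customer chatbot and an unassessed pilot would be ordered as CV screening tool, pilot, chatbot, spam filter.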

Tip: If you need clarity or more detailed guidance on AI governance, contact a Regulyn expert.