EU AI Act Explained - Risk Categories

Introduction

Many organisations are preparing for the EU AI Act and asking the same questions:

what is the risk level of our AI system?

what counts as high-risk AI?

is a chatbot high-risk or limited risk?

This article will explain the EU AI Act risk categories with practical examples.

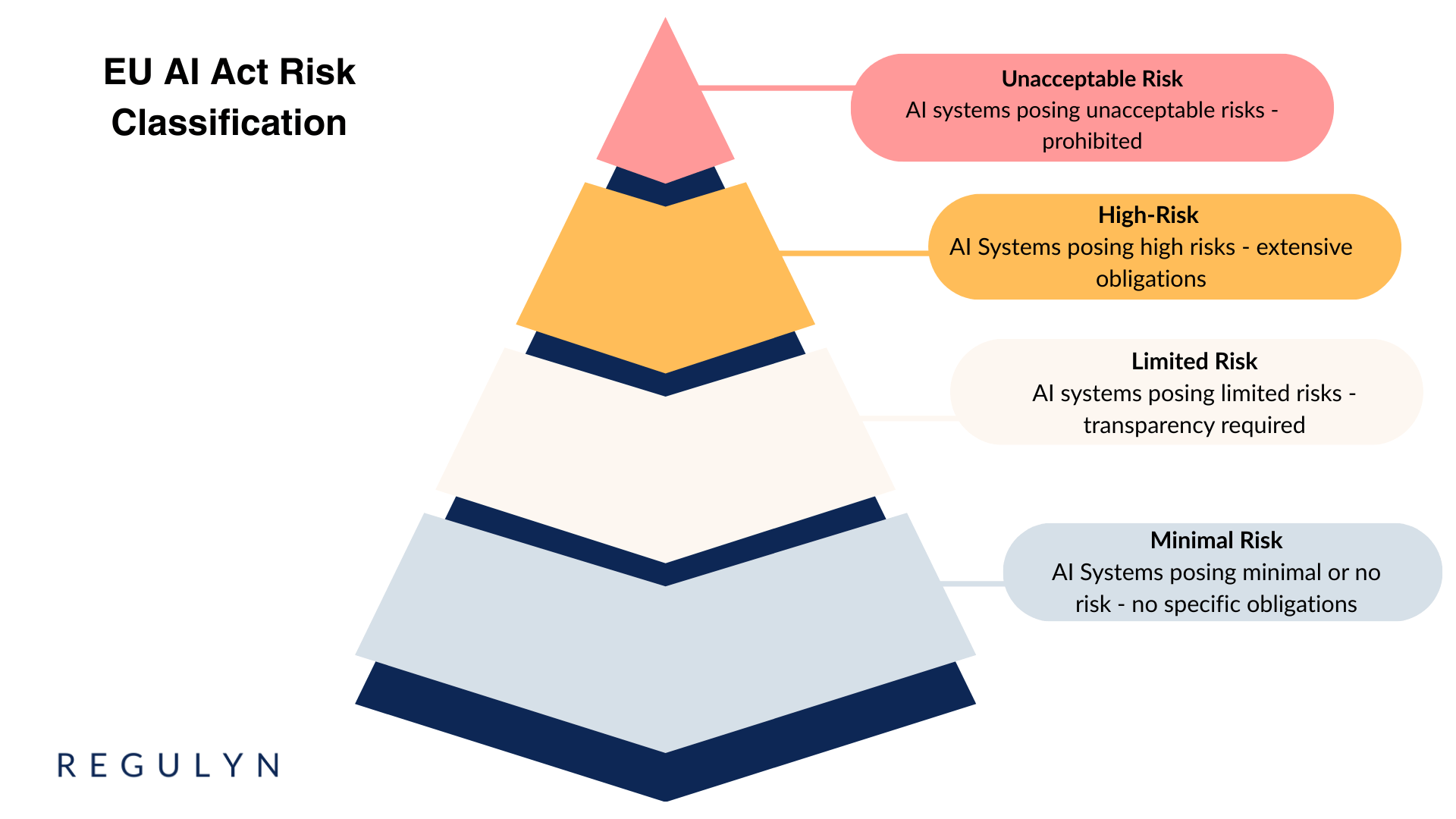

The EU AI Act takes a risk-based approach and establishes four risk categories for AI systems, each with corresponding obligations. Companies, public organizations, and other natural or legal persons must comply with obligations that vary with the risk level of the AI system.

What are prohibited AI practices (or systems)?

Prohibited AI systems, which pose an unacceptable risk, must not be placed on the market, put into service or used within the EU. Prohibited practices (i.e. prohibited AI systems) include:

Subliminal manipulation

Exploitation of vulnerabilities (e.g. age, disability, socio-economic situation)

Social scoring (by public or private actors)

Real-time remote biometric identification in public spaces for law enforcement (narrow exceptions exist)

Biometric categorisation using sensitive attributes

Emotion recognition in the workplace and in education (exceptions for medical or safety reasons)

Indiscriminate (untargeted) scraping of facial images from the internet or CCTV footage to build facial recognition databases

Predicting the risk that a person will commit a criminal offence based solely on profiling or personality traits

Practical examples:

A company cannot develop or deploy (use) emotion recognition to assess employee motivation, engagement or dissatisfaction.

A tech company cannot place AI-powered facial-image scraping software on the EU market.

Important note: The ban applies even if the AI feature is not intended to be used but is active. For example, a feature that is not intended for evaluating employees' emotions is still covered if those emotions are, in fact, recognised (e.g. sentiment analysis of speech, automated analysis in video conferencing apps).

Key obligations:

Must not be placed on the market, put into service or used in the EU (the prohibitions apply from 2 February 2025; the Act itself entered into force on 1 August 2024)

What are High-Risk AI Systems?

High-Risk AI systems are subject to extensive obligations under the EU AI Act. There are two pathways by which an AI system is classified as high-risk.

Safety Components as High-Risk AI

AI systems intended as safety components of products covered by the EU harmonisation legislation listed in Annex I, or that are themselves such products, are high-risk AI systems where the product must undergo a third-party conformity assessment.

Practical examples: AI in medical devices, AI in vehicles, AI in toys

High-Risk AI systems defined in Annex III

AI systems used in specified high-risk areas are considered high-risk AI. High-Risk areas and use cases include:

Biometric identification

Education and vocational training

AI determining access to education

AI assessing students

Employment and recruitment

AI in recruitment, e.g. screening applications

AI for evaluating employee performance

Access to private or public services

AI used for creditworthiness assessment

AI assessing eligibility to public services

Law enforcement

AI for assessing re-offending risk

Migration and border control

AI for examining visa applications or detecting falsified documents

Administration of justice

AI assisting judicial authorities in researching and interpreting facts and the law (e.g. when drafting judgements)

Practical examples:

A public organisation uses AI to screen documents to determine if applicants qualify for social benefits

A company develops AI-assisted software to evaluate the severity of a patient's depression or anxiety (medical device software). The software is simultaneously a high-risk AI system.

Key obligations for High-Risk Systems:

Risk management system (Article 9)

Data governance and quality requirements (Article 10)

Technical documentation (Article 11 and Annex IV)

Automatic logging (Article 12)

Transparency and information to deployers (Article 13)

Human oversight (Article 14)

Accuracy, robustness, and cybersecurity (Article 15)

Quality management system (Article 17)

Conformity assessment (Article 43)

CE marking (Article 48)

Transparency (Article 50)

EU database registration (Articles 49 and 71)

Post-market monitoring (Article 72)

Serious incident reporting (Article 73)

Important notes:

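An Annex III AI system is not considered high-risk if it does not pose a significant risk of harm, for example because it performs a narrow procedural task, improves the result of a previously completed human activity, detects decision-making patterns without replacing or influencing the human assessment, or performs a preparatory task. The exception never applies where the system profiles natural persons. (De minimis exception)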

Many of the extensive obligations are placed on the provider of the high-risk AI system.
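The two pathways and the exception described above can be sketched as a simple check. This is a minimal illustration, not a legal test: the class, field and area names are hypothetical, the area list only mirrors the areas named in this article, and the exception is reduced to two yes/no flags.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional

# Hypothetical labels for the Annex III areas named in this article.
ANNEX_III_AREAS = {
    "biometrics",
    "education",
    "employment",
    "essential_services",
    "law_enforcement",
    "migration_border_control",
    "justice",
}


@dataclass
class SystemProfile:
    """Illustrative self-assessment answers (assumed structure, not from the Act)."""
    annex_i_safety_component: bool = False   # pathway 1: product safety component
    annex_iii_area: Optional[str] = None     # pathway 2: listed use area, if any
    narrow_or_supporting_task: bool = False  # simplified exception condition
    replaces_human_assessment: bool = False  # the exception requires this to be False


def is_high_risk(profile: SystemProfile) -> bool:
    # Pathway 1: safety component of a product covered by Annex I legislation.
    if profile.annex_i_safety_component:
        return True
    # Pathway 2: use in one of the listed Annex III areas.
    if profile.annex_iii_area not in ANNEX_III_AREAS:
        return False
    # Exception: narrow or supporting task that does not replace human assessment.
    exempt = profile.narrow_or_supporting_task and not profile.replaces_human_assessment
    return not exempt
```

For instance, `is_high_risk(SystemProfile(annex_iii_area="employment"))` returns `True`, while the same profile with `narrow_or_supporting_task=True` returns `False`. A real assessment would need to document the reasoning behind each answer rather than rely on flags.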

What is a Limited-Risk AI System?

AI systems posing limited risk are mainly subject to transparency obligations. Limited-risk AI systems are not explicitly defined in the EU AI Act.

Limited risk AI could include:

AI interacting with natural persons

AI generating content (synthetic audio, image, video or text content)

Key obligations:

labelling AI-generated content as such

informing users that they are interacting with AI, unless it is obvious

informing people that they are subject to emotion recognition or biometric categorisation

disclosing that content is AI-manipulated (deepfakes, text published to inform the public; limited exceptions apply)

Important notes:

Transparency obligations apply to providers (AI systems interacting with natural persons, generating content) and deployers (emotion recognition, biometric categorization, deepfakes)

Transparency obligations may also arise from other EU and national regulation (e.g. GDPR)
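The split of transparency obligations between providers and deployers can be sketched as a lookup table. This is a hedged illustration only: the feature keys and the function name are hypothetical, and the duty texts simply paraphrase the key obligations listed above.

```python
from __future__ import annotations

# Hypothetical feature keys; duty texts paraphrase the obligations in this article.
PROVIDER_DUTIES = {
    "interacts_with_people": "inform users they are interacting with AI, unless obvious",
    "generates_content": "label AI-generated content as such",
}
DEPLOYER_DUTIES = {
    "emotion_recognition": "inform people they are subject to emotion recognition",
    "biometric_categorisation": "inform people they are subject to biometric categorisation",
    "deepfakes": "disclose that content is AI-manipulated",
}


def transparency_duties(role: str, features: set[str]) -> list[str]:
    """Return the duties that apply to a role for the given system features."""
    table = {"provider": PROVIDER_DUTIES, "deployer": DEPLOYER_DUTIES}
    if role not in table:
        raise ValueError(f"unknown role: {role!r}")
    duties = table[role]
    # Sort the features so the result order is deterministic.
    return [duties[f] for f in sorted(features) if f in duties]
```

A provider of a customer chatbot would call `transparency_duties("provider", {"interacts_with_people"})`. An organisation that both builds and uses a system would need to check both roles.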

Practical examples:

a company launches an AI chatbot to reply to generic customer requests

a public organization implements an AI chatbot to assist citizens in navigating their website

What are the obligations for Minimal-Risk AI Systems?

The EU AI Act does not impose any specific requirements on AI systems posing minimal risk. Minimal-risk AI systems are not explicitly defined. They may include:

spam filters

AI-enhanced video editing

Key obligations:

No AI Act-specific obligations; providers may voluntarily adopt codes of conduct

Important notes:

Even if there are no AI Act specific requirements, other regulations apply

Summary

The EU AI Act takes a risk-based approach and establishes four risk categories for AI systems:

Prohibited AI (Banned): Systems like workplace emotion recognition, social scoring, and indiscriminate facial scraping cannot be used in the EU at all. This ban has applied since February 2, 2025.

High-Risk AI (Strict Rules): AI used in critical areas (HR, education, law enforcement, credit scoring etc.) must meet extensive requirements including risk management, documentation, human oversight, and safety testing.

Limited-Risk AI (Transparency Required): Chatbots and content generators must disclose they are AI-powered and label AI-generated content so users know what they are interacting with.

Minimal-Risk AI (No Special Rules): Everyday tools like spam filters have no AI Act requirements, though other EU laws still apply.
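The order in which the four categories are checked can be sketched as a short triage function. This is a simplified illustration under stated assumptions: the names are hypothetical, each input is the outcome of a separate assessment, and the function returns only the strictest category even though a high-risk system may also carry transparency obligations.

```python
from enum import Enum


class RiskTier(Enum):
    PROHIBITED = "prohibited"
    HIGH = "high-risk"
    LIMITED = "limited-risk"
    MINIMAL = "minimal-risk"


def triage(prohibited_practice: bool, high_risk: bool, transparency_trigger: bool) -> RiskTier:
    """Return the strictest tier that applies (illustrative simplification)."""
    if prohibited_practice:
        return RiskTier.PROHIBITED
    if high_risk:
        return RiskTier.HIGH
    if transparency_trigger:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


# A generic customer-service chatbot: no prohibited practice, no high-risk use,
# but it interacts with natural persons.
print(triage(False, False, True).value)  # prints "limited-risk"
```

The same chatbot used to screen job applications would be assessed with `high_risk=True` and land in the high-risk tier, which is why the use case matters more than the technology.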

Organizations need to identify which category their AI falls into and follow the corresponding rules. If you need tailored support in interpreting the AI Act requirements for your organisation, please contact us directly.